We enabled support for the link preload web feature in Firefox 78, for now only on the Nightly channel and in Firefox Early Beta. It is not yet enabled in Firefox Release, pending deeper product integrity checking and performance evaluation.

What is "preload"

Web developers may use the Link: <..>; rel=preload response header or <link rel="preload"> markup to hint the browser to preload some resources in advance and with a higher priority.

Firefox can now preload a number of resource types, such as styles, scripts, images and fonts, as well as responses to be later used by plain fetch() and XHR. Used wisely, preload helps the web page render and reach a stable, interactive state faster.
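As a rough illustration of the two forms a preload hint can take, here is a minimal TypeScript sketch that formats both. The helper names `preloadLinkHeader` and `preloadLinkTag` are hypothetical, made up for this example, and the sketch assumes URLs that need no escaping.

```typescript
// The resource types this post lists as preloadable in Firefox.
type PreloadAs = "style" | "script" | "image" | "font" | "fetch";

// Hypothetical helper: formats the value of a `Link` response header
// for one preload hint. No escaping is done; the URL is assumed to
// contain no angle brackets.
function preloadLinkHeader(url: string, as: PreloadAs): string {
  return [`<${url}>`, "rel=preload", `as=${as}`].join("; ");
}

// Hypothetical helper: the equivalent markup form. The URL is assumed
// to contain no double quotes.
function preloadLinkTag(url: string, as: PreloadAs): string {
  return `<link rel="preload" as="${as}" href="${url}">`;
}
```

A server would send the first string as the value of a `Link` header, while the second string belongs in the document markup.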

Don't confuse this with "prefetch". Prefetching (a similar technique using <link rel="prefetch"> tags) loads resources for the next user navigation that is likely to happen. The browser fetches those resources with a very low priority, without affecting the currently loading page.

Web Developer Documentation

Mozilla provides MDN documentation on how to use <link rel="preload">. It is definitely worth reading for details; explaining how to use preload is outside the scope of this post.

Implementation overview

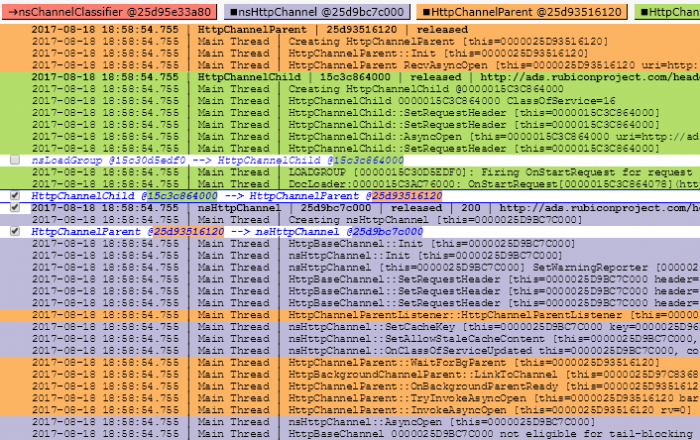

Firefox parses the document's HTML in two phases: a prescan (also called speculative) phase and the actual DOM tree building.

The prescan phase only quickly tokenizes tags and attributes and starts so-called "speculative loads" for the tags it finds; this is handled by resource loaders specific to each type. A preload is just another type of speculative load, but with a higher priority. We limit speculative loads to one per URL, so only the first tag referring to that URL starts a speculative load. Hence, if the consumer tag comes first and the related <link preload> tag for the same URL comes after it, the speculative load will only have a regular priority.

At the DOM tree building phase, during which we create actual consuming DOM node representations, the respective resource loader first looks for an existing speculative load to use it instead of starting a new network load. Note that except for stylesheets and images, a speculative load is used only once, then it's removed from the speculative load cache.
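The two phases can be pictured with a small toy model. This is only a sketch of the behavior described above, not Firefox's actual code: the class `SpeculativeLoadCache`, its method names, keying the cache by URL alone, and giving non-speculative loads a regular priority are all assumptions made for illustration.

```typescript
type ResourceKind = "style" | "script" | "image" | "font" | "fetch";
type Priority = "regular" | "high";

interface SpeculativeLoad {
  url: string;
  kind: ResourceKind;
  priority: Priority;
}

// Toy model of the speculative load cache (hypothetical, keyed by URL only).
class SpeculativeLoadCache {
  private loads = new Map<string, SpeculativeLoad>();
  networkLoads = 0;

  // Prescan phase: only the first tag seen for a URL starts a load.
  // A <link rel="preload"> tag gets a higher priority than a consumer tag.
  prescan(url: string, kind: ResourceKind, isPreloadTag: boolean): void {
    if (this.loads.has(url)) return; // later tags for the same URL are ignored
    this.loads.set(url, { url, kind, priority: isPreloadTag ? "high" : "regular" });
    this.networkLoads++;
  }

  // DOM tree building phase: reuse an existing speculative load if there
  // is one, otherwise start a new network load.
  consume(url: string, kind: ResourceKind): SpeculativeLoad {
    const existing = this.loads.get(url);
    if (existing) {
      // Stylesheets and images stay in the cache; all other types are single-use.
      if (kind !== "style" && kind !== "image") this.loads.delete(url);
      return existing;
    }
    this.networkLoads++;
    return { url, kind, priority: "regular" }; // assumed priority for a fresh load
  }
}
```

In this model, a consumer tag that precedes its preload tag wins the prescan, so the single load it starts runs at regular priority and the preload hint has no effect on it.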

Firefox preload behavior

Supported types

"style", "script", "image", "font", "fetch".

The "fetch" type is for use by fetch() or XHR.

The "error" event notification

Conditions to deliver the error event in Firefox are slightly different from e.g. Chrome.

For all resource types we trigger the error event when there is a network connection error or an error response from the server (e.g. 404). A DNS error is the exception: for cross-origin requests we taint the error event and fire load instead.

Some resource types also fire the error event when the MIME type of the response is not supported for that resource type; this applies to style, script and image. The style type also produces the error event when not all @imports are successful.
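The conditions above can be summarized as a small decision function. This is a hedged sketch of how we read those rules, not the actual implementation: the `LoadOutcome` shape and the function name `preloadEventFor` are hypothetical, and the DNS case is modeled only for cross-origin requests, as that is the only case described here.

```typescript
type PreloadType = "style" | "script" | "image" | "font" | "fetch";

// Hypothetical description of how a preload's network load ended.
type LoadOutcome =
  | { kind: "connectionError" }
  | { kind: "crossOriginDnsError" }
  | { kind: "httpError"; status: number } // e.g. 404
  | { kind: "response"; mimeSupported: boolean; allImportsOk: boolean };

// Returns which event the <link rel="preload"> tag would receive
// under the rules described in this section.
function preloadEventFor(as: PreloadType, outcome: LoadOutcome): "load" | "error" {
  // The error is tainted for cross-origin DNS failures: load fires instead.
  if (outcome.kind === "crossOriginDnsError") return "load";
  if (outcome.kind === "connectionError" || outcome.kind === "httpError") return "error";

  // Only style, script and image check the MIME type of the response.
  const checksMime = as === "style" || as === "script" || as === "image";
  if (checksMime && !outcome.mimeSupported) return "error";

  // Stylesheets additionally require every @import to succeed.
  if (as === "style" && !outcome.allImportsOk) return "error";
  return "load";
}
```

Note that under this reading a font or fetch preload with an unexpected MIME type still fires load, since the MIME check applies to style, script and image only.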

Coalescing

If there are two or more <link rel="preload"> tags before the consuming tag, all mapping to the same resource, they all coalesce to the same speculative preload: only one network load is started, and event notifications are delivered to each of the tags.

If there is a <link rel="preload"> tag after the consuming tag, then it will start a new preload network fetch during the DOM tree building phase.

Sub-resource Integrity

Handling of the integrity metadata for Sub-resource Integrity (SRI) checking is a bit more complicated. For <link rel=preload> it is currently supported only for the "script" and "style" types.

The rules are as follows. For the first tag for a resource that we hit during the prescan phase, either a <link preload> or a consuming tag, we start the fetch with SRI set up according to that tag's integrity attribute. All other tags matching the same resource (URL) are ignored during the prescan phase, as mentioned earlier.

At the DOM tree building phase, the consuming tag reuses the preload only if one of the following holds for the consuming tag:

- the integrity attribute is missing completely,
- the value of the integrity attribute is exactly the same as on the preload tag,
- or the value is "weaker", meaning the hash algorithm of the consuming tag is weaker than the hash algorithm of the link preload tag.

Otherwise, the consuming tag starts a completely new network fetch with a differently set up SRI.
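The reuse rules above can be sketched as a single predicate. This is a simplified illustration, not Firefox's code: the function name `consumerReusesPreload` is hypothetical, each integrity value is assumed to hold exactly one `algorithm-hash` token, the strength ordering sha256 < sha384 < sha512 is taken from the SRI specification, and treating an unknown algorithm as "no reuse" is an assumption.

```typescript
// Relative strength of the SRI hash algorithms (higher is stronger).
const HASH_STRENGTH = new Map<string, number>([
  ["sha256", 1],
  ["sha384", 2],
  ["sha512", 3],
]);

// Extracts the algorithm prefix from a single "alg-hash" integrity token.
function algorithmOf(integrity: string): string {
  const dash = integrity.indexOf("-");
  return (dash === -1 ? integrity : integrity.slice(0, dash)).toLowerCase();
}

// Hypothetical predicate: does the consuming tag reuse the preload,
// or does it have to start a completely new network fetch?
function consumerReusesPreload(
  preloadIntegrity: string | undefined,
  consumerIntegrity: string | undefined,
): boolean {
  // Consumer has no integrity attribute at all: reuse.
  if (consumerIntegrity === undefined) return true;
  // Preload was started without integrity: it cannot be verified later.
  if (preloadIntegrity === undefined) return false;
  // Exactly the same value: reuse.
  if (consumerIntegrity === preloadIntegrity) return true;

  const preloadStrength = HASH_STRENGTH.get(algorithmOf(preloadIntegrity));
  const consumerStrength = HASH_STRENGTH.get(algorithmOf(consumerIntegrity));
  // Assumption: an unrecognized algorithm never allows reuse.
  if (preloadStrength === undefined || consumerStrength === undefined) return false;
  // Consumer asks for a weaker algorithm than the preload used: reuse.
  return consumerStrength < preloadStrength;
}
```

In this sketch, a preload without integrity followed by a consumer with integrity yields `false` (two network fetches), while a preload with integrity followed by a consumer without it yields `true`.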

As link preload is an optimization technique, we start the network fetch as soon as we encounter it. If the preload tag doesn't specify integrity, a consuming tag found later can't enforce integrity checking on that running preload: doing so would require caching the data, which we avoid to keep memory footprint and complexity down.

Doing something like this is considered a website bug causing the browser to do two network fetches:

<link rel="preload" as="script" href="script1.js"><script src="script1.js" integrity="sha512-...."></script>

The correct way is:

<link rel="preload" as="script" href="script1.js" integrity="sha512-...."><script src="script1.js"></script>

Specification

The main specification is maintained by the W3C. Preload is also woven into the WHATWG Fetch specification.

The W3C specification is very vague and leaves many things unclear, among them:

- Which types, or which minimal set of types, the browser must or should support. This is particularly bad because specifying an unsupported type fires neither the load nor the error event on the <link> tag, so a web page can't detect an unsupported type.
- What the exact conditions to fire the error event are.
- How exactly to handle (coalesce) multiple <link rel="preload"> tags for the same resource.
- How exactly, and whether, to handle a <link rel="preload"> found after the consuming tag.
- How exactly to handle the integrity attribute on both the <link preload> and the consuming tag, specifically when it is missing on one of them or differs between the two, and also how to handle integrity on multiple link preload tags.